AI adoption is reshaping business operations, customer relationships, and strategic planning, but not always for the better. Companies launch AI projects to optimize back-office operations and gain an edge in a competitive market, yet such endeavors often end up wasting time and money.

So, why do AI projects fail? We gathered statistics, research papers, and expert opinions so you can learn from others’ mistakes without making your own. Explore 20 common pitfalls on the way to AI adoption and get practical recommendations for successful project delivery.

AI rush and scale of failure

Artificial intelligence has opened lucrative opportunities for business automation, advanced analytics, and informed decision-making. This potential translates into real metrics, such as higher ROI, more transactions, improved employee productivity, and shorter time to market. No wonder organizations across industries have begun integrating AI into their internal systems and products: venture capital investment in agentic AI grew by 265% from Q4 2024 to Q1 2025.

The staggering truth is that around 80% of AI projects fall flat, and as many as 95% fail to deliver any ROI. Failure can lurk at every stage, from unclear business strategy and poor management to insufficient data quality and rejection by end users. Companies also continue to struggle with AI-related risks such as inaccurate outputs, cybersecurity threats, and intellectual property infringement. Together, these factors form a complex landscape, and the ability to navigate it separates success from failure.

What percentage of AI projects fail?

- Up to 30% of GenAI projects may be abandoned after proof of concept (PoC) due to poor data quality, unclear business value, or inadequate risk controls.

- Gartner predicts that 40% of agentic AI projects will be cancelled by the end of 2027 due to rising costs, unclear business value, and insufficient risk management.

- Only 48% of AI initiatives reach production, a process that typically spans about 8 months.

Nevertheless, these gloomy statistics haven’t dampened companies’ enthusiasm for innovation. Among organizations that have adopted or plan to adopt GenAI, 87% were expected to increase their investment in it in 2025. That makes it vital to understand how to translate AI’s enormous potential into measurable results and how to address the root causes of failure.

Users don’t always need AI

Over the past two years, AI has been advancing at an incredible pace, creating a unique situation where technological development is moving much faster than adoption. And I’m not talking about corporate adoption – companies are eager to experiment with AI because it promises lower costs and faster delivery, both essential for revenue growth. What lags behind, however, is user adoption of AI-powered features.

For most users, AI itself is not the value; they don’t need “AI” per se. What they need are better, more useful experiences that AI can help deliver. But AI alone doesn’t sell: products must offer what users actually want. There are even jokes about the latest waves of hype, such as MCP (Model Context Protocol), which experts call one of those rare cases with more builders than users.

In my view, the most common reason AI projects fail is simple: users don’t need them. Investors might need them, CEOs might need them – but users don’t. That’s why it’s crucial not to forget the fundamentals: market research and user research.

Why do AI projects fail: the core reasons

Vague strategy, data issues, inadequate infrastructure, and poor governance are the main obstacles for businesses that want to build successful AI projects. Let’s take a closer look at each of them.

Strategy and goal misalignment

It all starts with planning. AI initiatives should be designed to address a company’s specific problems and serve as integral components of a broader business strategy. For each initiative, the organization should prepare a dedicated business case that outlines the project’s goals, justification, and success criteria.

In reality, many projects’ objectives drift away from the original business plan. This often happens when company owners launch AI projects simply because AI is trendy, or when stakeholders expect different outcomes from a single solution. Unsurprisingly, 92% of leaders are concerned that their AI pilots are moving forward without addressing the business problems identified beforehand.

Misunderstanding the problem

Failing to understand which problems AI should solve, or misaligning the solution with customers’ pain points, results in a flawed strategy, wasted resources, and products that no one needs or wants to use. The main mistakes include focusing on the wrong metric, misinterpreting requirements, and overlooking context and limitations.

Sometimes, the failure of an AI project simply comes down to miscommunication between stakeholders, managers, and technical teams. As a business leader, you should ensure that the development team understands the software’s purpose and domain context and focuses on solving a real problem.

Lack of clear strategy and ROI

AI projects require a defined roadmap that focuses on business impact rather than technical success. Yet 97% of leaders who are considering or already using AI report difficulty demonstrating business value, which points to planning gaps and a focus on model accuracy rather than ROI.

Mindlessly following AI trends can be misleading, because such an approach embeds neither clear success metrics nor measurable, data-driven tasks. Even if the product launches, it will eventually fail to deliver tangible business value, since that value was never clearly defined at the outset.

Focus on technology, not solution

Shifting attention from business needs to technical intricacies is a sure way to build a technically impressive model that is irrelevant to stakeholders and useless to end users. Not every optimization effort requires AI, and business owners should recognize that AI is not always the best way to solve operational problems.

Overpromising and feasibility

The hype around AI has made businesses believe that it’s a silver bullet for everything from process optimization to strategic decision-making. When companies apply it to problems that are too difficult for AI to solve, project failure is inevitable. They also often underestimate the true cost and complexity of implementing the technology. Such mistakes result in inaccurate project planning, hidden costs, integration problems, and compliance risks.

Artificial intelligence is not omnipotent. Understanding its constraints is the first step toward a viable tool that actually delivers the expected benefits. Involving technical experts to assess the project’s feasibility, clearly defining the business problem, and analyzing data availability all increase the chances of success.

Data constraints

Problems with training data are one of the major reasons why AI projects fail. Research predicts that 60% of AI projects not supported by AI-ready data will be abandoned in 2026.

Insufficient data quantity

An AI solution needs large volumes of relevant training data. For example, a model cannot reliably identify rare diseases if it has been trained on only 200 relevant MRI scans. Limited data prevents the model from learning general patterns and capturing the full range of possible scenarios.

Insufficient training data leaves a model unable to deliver results at scale and makes its predictions unfair, inaccurate, and unreliable. Such models underperform in real-world scenarios, fail to recognize rare patterns, and produce highly variable outputs. Businesses relying on their results can make wrong decisions on pricing, underwriting, customer retention strategies, and more.

Poor data quality

The old “garbage in, garbage out” principle still applies to AI training: incomplete, inaccurate, or poor-quality data leads to unreliable, biased, or faulty results. Yet many organizations, in their pursuit of emerging technologies, ignore data quality or treat it as an afterthought. It’s hardly surprising, then, that 56% of survey respondents name data reliability as the number one challenge when advancing gen AI pilots.

Poor data quality leads to the following failures:

- Overfitting. The model fits the training data too closely instead of learning general rules: it memorizes both real patterns and noise and performs poorly on data it hasn’t seen. This typically happens when the training data is small or insufficiently diverse.

- Ignoring edge-case scenarios. The algorithm disregards rare and unusual scenarios and fails in unexpected situations. The root cause is often an imbalanced training dataset in which some categories are underrepresented.

- Model underperformance (underfitting). The training data is oversimplified and lacks essential features or patterns, so the model ends up too simple to capture real relationships and produce accurate outcomes.

- Data drift. Real-world conditions, such as market trends and regulatory requirements, evolve over time, but the model keeps relying on old training data that no longer reflects them.

- Correlation-driven errors. The system learns false patterns from training data that contains misleading relationships, so it makes incorrect assumptions and generates unreliable predictions.
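To make the data drift item more concrete, here is a minimal sketch of one widely used drift check, the Population Stability Index (PSI), which compares how a feature is distributed at training time versus in recent production data. The transaction amounts, the bin edges, and the 0.2 alert level are hypothetical assumptions; 0.2 is a common rule of thumb, not a standard.

```python
import math
from bisect import bisect_right

def bin_shares(values, edges):
    """Share of values in each bin; `edges` are sorted inner bin boundaries."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    # Floor empty bins at a tiny share so the logarithm below stays defined.
    return [max(c / len(values), 1e-6) for c in counts]

def population_stability_index(expected, actual, edges):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    exp_shares = bin_shares(expected, edges)
    act_shares = bin_shares(actual, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_shares, act_shares))

# Hypothetical transaction amounts seen at training time vs. in recent production traffic.
training_amounts = [20, 35, 40, 55, 60, 75, 80, 95, 110, 160]
recent_amounts = [30, 70, 85, 105, 120, 130, 140, 155, 170, 190]
edges = [50, 100, 150]  # assumed bin boundaries

psi = population_stability_index(training_amounts, recent_amounts, edges)
print(f"PSI = {psi:.2f}")  # PSI = 1.13
if psi > 0.2:  # rule-of-thumb alert level; tune per use case
    print("Significant drift: review incoming data and consider retraining")
```

A PSI near zero means the two distributions match; here recent amounts have shifted toward higher bins, so the check flags drift. Running such a check on a schedule is one way to catch drift before it quietly degrades predictions.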

Algorithmic bias

Biased training data, subjective manual labelling, and flaws in algorithm design create systems that produce systematically unfair, discriminatory, or skewed outcomes. Such datasets often reflect existing social, cultural, or structural biases, which carry over into the model’s findings. Take an AI recruitment model: if it were trained on data dominated by younger candidates, it would favor younger applicants’ resumes and downgrade candidates with longer work experience.

In some cases, subject-matter experts are needed to identify and eliminate such biases. Flawed models not only produce erroneous outcomes but also carry regulatory, ethical, and reputational risks.
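One simple way to screen a recruitment model like the one described above is to compare selection rates across groups. The sketch below computes the ratio of the lowest group’s selection rate to the highest (often called the disparate impact ratio); a value below 0.8 echoes the “four-fifths” rule of thumb from US employment guidance. The age bands and decisions are hypothetical, and a single ratio is only a screening signal, not proof of fairness.

```python
from collections import defaultdict

def selection_rates(records):
    """Share of candidates the model shortlisted, per group."""
    shortlisted = defaultdict(int)
    total = defaultdict(int)
    for group, selected in records:
        total[group] += 1
        shortlisted[group] += int(selected)
    return {g: shortlisted[g] / total[g] for g in total}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())

# Hypothetical model decisions: (age band, was the resume shortlisted?)
decisions = (
    [("under_35", True)] * 30 + [("under_35", False)] * 20
    + [("35_plus", True)] * 12 + [("35_plus", False)] * 38
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)                   # {'under_35': 0.6, '35_plus': 0.24}
print(f"ratio = {ratio:.2f}")  # ratio = 0.40
if ratio < 0.8:
    print("Possible adverse impact: audit the training data and features")
```

A low ratio tells you where to look, not why the gap exists; that is where subject-matter experts come in.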

Confusing traditional and AI-ready data

Using traditional datasets without preparing them for AI can lead to model underperformance, longer development times, and wasted resources. Empty or outdated fields, inconsistent formats, and large volumes of unstructured data such as images or text all contribute to unreliable outcomes.

Yet when it comes to AI data preparation, many businesses are shooting in the dark: 63% of companies either lack or are unsure whether they have the right data management practices for AI model training.

Unlike traditional data management, which focuses on control and consistency, AI data management is more complex. It emphasizes learning, adaptability, and continuous improvement across both structured and unstructured data. It also requires collaboration between cross-functional teams, continuous updates, and modern data versioning and data warehousing tools.

Shifting from traditional to AI-ready data management is a gradual, iterative process that varies from business to business. To prepare your data, follow these tips:

- Match data to relevant AI use cases

- Define data governance requirements for AI

- Evolve the traditional data management framework

- Prepare the data infrastructure for AI training datasets

- Continuously validate and enrich data
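Continuous validation can start small. Below is a minimal sketch of automated checks for the problems mentioned earlier in this section: empty fields, outdated records, and inconsistent formats. The field names, the ISO date format, and the two-year freshness window are hypothetical assumptions; in practice, teams often use a dedicated data validation tool rather than hand-written checks.

```python
from datetime import date, datetime

REQUIRED_FIELDS = ("customer_id", "signup_date", "country")  # hypothetical schema
MAX_AGE_DAYS = 730  # assumed freshness window: records older than ~2 years are flagged

def validate_record(record, today):
    """Return a list of human-readable problems found in one record."""
    problems = []
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    raw_date = record.get("signup_date")
    if raw_date:
        try:
            signup = datetime.strptime(raw_date, "%Y-%m-%d").date()
        except ValueError:
            problems.append(f"inconsistent date format: {raw_date!r}")
        else:
            if (today - signup).days > MAX_AGE_DAYS:
                problems.append("outdated record")
    return problems

records = [
    {"customer_id": "C1", "signup_date": "2024-03-15", "country": "DE"},
    {"customer_id": "C2", "signup_date": "15/03/2024", "country": "PL"},
    {"customer_id": "C3", "signup_date": "2019-01-02", "country": ""},
]

today = date(2025, 1, 1)  # fixed date so the example is reproducible
report = {r["customer_id"]: validate_record(r, today) for r in records}
for customer_id, problems in report.items():
    print(customer_id, problems or "OK")
```

Running checks like these on every data load, rather than during occasional reviews, is what turns validation into a continuous process.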

Training vs. real-world data divide

Sometimes, the data an AI system was trained on differs significantly from the information it encounters after deployment. Such projects fail because the system cannot adapt to changing conditions, and its performance declines. The problem is not only historical data that may have become irrelevant or fail to represent the full diversity of real cases. The world is also dynamic: conditions, patterns, and trends change quickly, which forces businesses to update their models continuously.

Operational and infrastructure barriers

In pursuit of AI’s benefits, businesses often launch projects without considering the state of their infrastructure, software systems, and team competence, which can stall AI project development.

Inadequate infrastructure

Only 45% of organizations report that their AI projects last three years or more, and all of them have suitable AI infrastructure. In contrast, companies with static servers, no GPUs, and manual deployments are likely to see their AI projects stall for lack of technical maturity. Without adequate infrastructure, such as CI/CD pipelines, modular architecture, cloud integration, containerization, and orchestration, teams end up with inaccurate, biased models that won’t help achieve business goals.

Successful AI projects have:

- Powerful hardware for model training and experimentation

- Robust storage and data management infrastructure

- High-speed networking for efficient data exchange

- Modern software and tools for consistent reproducibility and scalability

- Scalable infrastructure for seamless deployment and real-time inference

Integration challenges

Integration obstacles go hand in hand with organizations’ reliance on legacy systems. According to one report, 60% of AI leaders identify integration with outdated systems and concerns about risk and compliance as the primary challenges to AI adoption. Legacy systems make it hard both to collect reliable datasets from scattered sources and to deploy products into outdated environments.

Old systems often lack APIs or modern interfaces for AI integration. Their APIs, if any, are often poorly documented and cannot handle the required data formats, protocols, or authentication methods. They cannot exchange different data types, which makes integration slow and error-prone.

To build a thriving AI product or tool, business leaders should adopt an API-first approach. It starts with examining how data will flow into the system through external APIs. Developers then build suitable APIs, defining the fields, formats, and validation rules that apply to the collected information.
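As an illustration of the API-first idea, the data contract can be written down before any integration code exists. The sketch below is a minimal, hypothetical example in plain Python: a `SupportTicket` payload with explicit fields, formats, and validation rules. The field names, the `T-` ID prefix, the allowed channels, and the length limit are assumptions; real projects would usually express the same contract in an OpenAPI specification or a schema library.

```python
import json
from dataclasses import dataclass

ALLOWED_CHANNELS = {"email", "chat", "phone"}  # hypothetical allowed values
EXPECTED_FIELDS = {"ticket_id", "channel", "text"}

@dataclass(frozen=True)
class SupportTicket:
    """Contract for ticket data a legacy system sends to the AI service."""
    ticket_id: str
    channel: str
    text: str

    def __post_init__(self):
        # Validation rules are part of the contract, not an afterthought.
        for name in EXPECTED_FIELDS:
            if not isinstance(getattr(self, name), str):
                raise ValueError(f"{name} must be a string")
        if not self.ticket_id.startswith("T-"):
            raise ValueError(f"ticket_id must start with 'T-': {self.ticket_id!r}")
        if self.channel not in ALLOWED_CHANNELS:
            raise ValueError(f"unsupported channel: {self.channel!r}")
        if not 1 <= len(self.text) <= 5000:
            raise ValueError("text must be 1-5000 characters")

def parse_ticket(payload: str) -> SupportTicket:
    """Parse a JSON payload; missing or unknown fields are rejected."""
    data = json.loads(payload)
    if not isinstance(data, dict) or set(data) != EXPECTED_FIELDS:
        raise ValueError(f"payload must have exactly the fields {sorted(EXPECTED_FIELDS)}")
    return SupportTicket(**data)

ok = parse_ticket('{"ticket_id": "T-1001", "channel": "chat", "text": "Refund not received"}')
print(ok)

try:
    parse_ticket('{"ticket_id": "1002", "channel": "fax", "text": "Hello"}')
except ValueError as err:
    print("Rejected:", err)
```

Because the contract rejects bad data at the boundary, problems surface during integration rather than later as silent model errors.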

Resource underestimation

Many senior leaders underestimate how much time it takes to train an AI model to the point where it can successfully solve business problems. They expect such projects to take weeks rather than months, disregarding the time and effort spent on data acquisition and cleaning, model experimentation, testing, and retraining. In reality, AI projects take eight months to a year or more to complete and deliver reliable results.

Another point is the level of investment such initiatives demand. First, they need ongoing funding for data acquisition, storage, cloud computing, and model training. Second, leadership often overlooks costs beyond initial development, such as maintenance, model retraining, and integrations. Third, AI development requires high-performance computing resources so that the system runs effectively under real-world workloads.

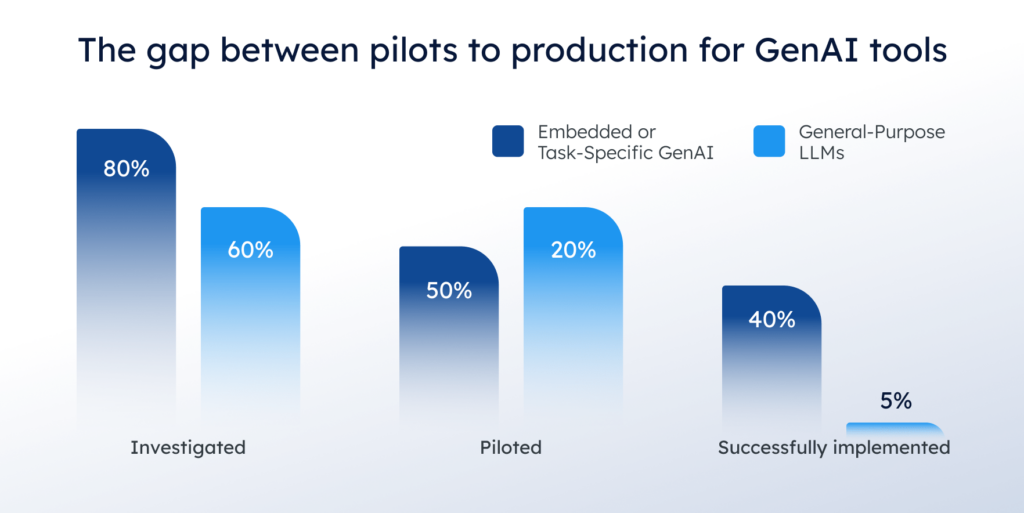

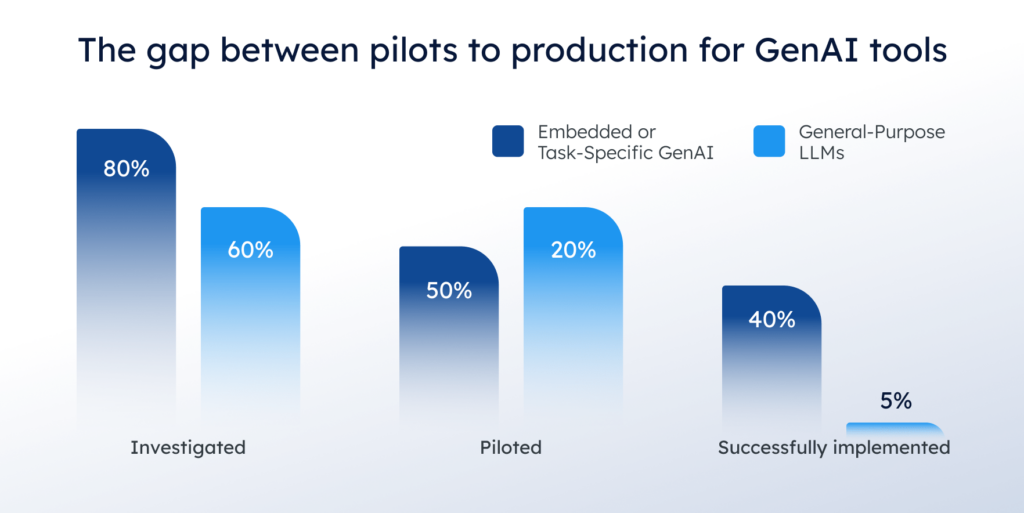

PoC vs. production gap

Companies build PoCs of their AI solutions to test feasibility, but many of them never make it to production. Proofs of concept and pilots often stall because of data, infrastructure, and scalability challenges. Additionally, AI tools can lose accuracy within a few months without proper maintenance and retraining.

How many AI projects fail at the PoC stage? As we mentioned earlier, up to 30%. The problem hits custom enterprise solutions especially hard: 95% of them don’t reach production. Generic LLM chatbots are the exception, with a pilot-to-implementation rate of approximately 83%. Yet they often fail in critical operations because they lack customization and memory.

Mistaking AI for app development

While both app development and AI development involve coding and deployment, they differ in development processes, success criteria, and lifecycle management. Confusing one with the other leads to unrealistic timelines and budgets, vague project goals, and frustration between technical and business teams.

Here are the main differences between AI and app development:

| Aspect | Traditional app development | AI development |
|---|---|---|
| Development essence | Rule-based and deterministic; follows explicit logic. | Research-based and probabilistic; learns patterns from data. |
| Project requirements | Clearly defined at the start. | Evolving and dependent on data and model performance. |
| Role of data | Data used mainly as input or content. | Data is central: it defines model behavior and accuracy. |
| Success criteria | Based on functionality and reliability. | Based on statistical performance. |
| Testing and validation | Verifies expected outputs. | Evaluates model performance on new data. |
| Team composition | Primarily software engineers and designers. | Data scientists, ML engineers, and domain experts. |
| Maintenance | Focus on bug fixes and feature updates. | Focus on continuous monitoring, retraining, and data updates. |

Neglecting maintenance

Unfortunately, AI models degrade over time. If you neglect ongoing maintenance, they start producing unreliable outputs that can negatively affect business decisions. For example, a fraud detection system trained on last year’s transactions may miss new fraud patterns. Data, user behavior, environments, and market trends keep changing, and AI tools must keep up with these shifts.

Businesses should regularly retrain and validate their models, use version control, and promptly address issues that emerge over time, such as model and data drift, new dependencies, and compliance updates.
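The “retrain and validate” step is often implemented as a promotion gate: a retrained model replaces the current one only if it performs at least as well on fresh, held-out data. The sketch below shows that logic for the fraud detection example, using hypothetical labels and predictions and recall (the share of actual fraud cases caught) as the only metric.

```python
def recall(y_true, y_pred):
    """Share of actual fraud cases (label 1) the model caught."""
    positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(positives) / len(positives) if positives else 0.0

def should_promote(current_preds, candidate_preds, y_true, min_gain=0.0):
    """Promote the retrained model only if recall on fresh data does not drop."""
    current = recall(y_true, current_preds)
    candidate = recall(y_true, candidate_preds)
    return candidate - current >= min_gain, current, candidate

# Hypothetical labels from the most recent month of transactions (1 = fraud).
y_recent = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
current_model_preds = [1, 0, 0, 0, 0, 0, 1, 0, 0, 0]  # model trained on last year's data
retrained_preds = [1, 0, 1, 1, 0, 1, 1, 0, 0, 0]      # model retrained on recent data

promote, old_recall, new_recall = should_promote(current_model_preds, retrained_preds, y_recent)
print(f"current recall={old_recall:.2f}, retrained recall={new_recall:.2f}, promote={promote}")
```

A single metric keeps the example short; a production gate would typically also check precision (the retrained model above raises one false alarm) and record which data and model versions were compared.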

Organizational and governance obstacles

Another cluster of issues behind failed AI initiatives lies within the organization itself. From poor project management to weak communication, these issues hamper model development and training, causing projects to fail or leaving them unable to deliver measurable impact.

Internal silos

AI excels in dynamic and interconnected environments. Yet many businesses still operate with legacy infrastructure and siloed structures, which makes it hard to innovate with emerging technologies. The problem lies in:

- Data silos. There is no central hub for the organization’s information. Each department keeps its records in diverse systems and formats. You cannot use such data for AI training as it is scattered and requires cleaning, mapping, and reconciling.

- Process silos. Teams have different priorities, tools, and workflows, making it hard to collaborate and launch cross-functional activities, such as an AI project launch. Such initiatives require input from multiple units; otherwise, they are unlikely to make it to production.

- Knowledge silos. Data scientists and AI experts might create a technically sound agentic AI system. However, if they lack contextual understanding of the organization’s strategic goals and daily operations, it won’t address real business problems.

Overall, disconnected processes, fragmented data, and a lack of collaboration are further reasons why AI projects fail. Initiatives launched in such conditions either stall at the very beginning or fail during implementation.

Lack of ethical governance

Datasets collected for AI model training often lack compliance and ethical governance. This can create legal, ethical, and operational risks and damage the business’s reputation. To ensure legal compliance and transparent practices, project leaders must confirm that the data their team uses is legitimately acquired and openly used. It must be representative, fair, traceable, and auditable. Otherwise, organizations risk exposing confidential information, and their models may produce harmful, unfair, or discriminatory outputs.

Security is another vital concern. Failing to secure your data can result in lawsuits, fines, and reputational damage. The numbers speak for themselves: the global average cost of a data breach has reached $4.4 million, while 97% of organizations reported AI-related security incidents. Following major regulations and frameworks, such as the GDPR, HIPAA, and the NIST AI Risk Management Framework, is vital not only for successful AI model development but also for the business as a whole.

Insufficient employee training/skills

Finding the right tech talent remains a major challenge and opportunity for businesses, as 90% of leaders acknowledge, and AI professionals are no exception. In-demand AI skills include knowledge of gen AI, predictive analytics, large language models (LLMs), natural language processing (NLP), machine learning (ML), deep learning, and reinforcement learning. 94% of executives report AI skill shortages and are pessimistic about the future, expecting 20–40% gaps in critical roles.

Staff training is no less important than model training. If employees don’t understand how AI tools can improve existing processes, they won’t change the way they work. Also make sure employees have enough time and resources to learn and to get answers to their questions.

Perceived risks

Some companies, despite having a technically sound AI solution, fail to communicate its value to their intended users. They present an “innovative AI tool” but forget to show its functionality, justify its reliability, and demonstrate its advantages. Customers, guided by perceived risks, may be reluctant to adopt AI features. They may know little about the solution, doubt the effectiveness of its AI features, or distrust AI in general.

Suppose you build a smart chatbot for customer service. While it offers clear business benefits in terms of support availability, cost reduction, and consistent assistance, customers may see AI support as unempathetic, low-quality, and frustrating even before trying it.

Vendor hype

AI solution providers often make exaggerated claims and marketing promises. They may mislead organizations about the technology’s capabilities, such as its ease of use or business impact. Influenced by vendor hype, leaders may overestimate what AI can achieve in their specific domain or underestimate the resources and governance they need to succeed.

The problem goes deeper than unrealistic expectations of immediate results: vendor-driven enthusiasm can be more damaging than it appears. It can lead companies to neglect due diligence, skip governance checks, prioritize technology over the business problems it is meant to solve, and overlook risks.

Recommendations for avoiding pitfalls

Building a successful AI tool that combines technical excellence with a high adoption rate and solves defined business problems requires preparation. The key elements include high technological maturity, AI-ready data, laser-focused planning, and strong governance.

Top tips for running a successful AI project:

- Achieve strategic clarity and feasibility. Choose long-term business problems to solve, since AI takes time to develop. Check whether AI is the right technology for your challenge: the problem should be neither too simple nor too complex for AI to handle.

- Conduct cost-benefit analysis. Confirm that your project will have a measurable impact on P&L and calculate when to expect ROI, rather than relying on assumptions unsupported by real numbers.

- Focus on users’ problems, not technology. Explore users’ needs through interviews, surveys, or observation, and define their pain points, such as spending 2 hours on manual data extraction. Choose technology after validating the problem and solution.

- Invest in infrastructure. Make upfront investments in infrastructure to support model deployment and data governance, cut model development time, and increase the volume of high-quality datasets for training.

- Introduce AI data governance. If your company has a traditional data framework, you can add AI-specific practices iteratively. For example, deploying a RAG (retrieval-augmented generation) system requires a continuously refreshed knowledge base, which pushes teams toward continuous data quality checks rather than periodic reviews.

- Ensure ethical governance. Set standards for fair and transparent data collection, processing, and use. Stay compliant with applicable regulations and continuously monitor data for bias or misuse.
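For the cost-benefit analysis tip, even a back-of-the-envelope model forces the numbers into the open. The sketch below computes the payback month and a simple ROI for a project with an upfront build cost and ongoing running costs (maintenance, retraining, cloud). All figures are hypothetical placeholders, and the model ignores discounting and ramp-up time, which a finance team would normally add.

```python
def payback_month(upfront_cost, monthly_benefit, monthly_running_cost, horizon_months=60):
    """First month in which cumulative net benefit covers the upfront cost, or None."""
    net_monthly = monthly_benefit - monthly_running_cost
    cumulative = -upfront_cost
    for month in range(1, horizon_months + 1):
        cumulative += net_monthly
        if cumulative >= 0:
            return month
    return None  # never pays back within the horizon

def simple_roi(upfront_cost, monthly_benefit, monthly_running_cost, months):
    """(total benefit - total cost) / total cost over the given period."""
    total_cost = upfront_cost + monthly_running_cost * months
    total_benefit = monthly_benefit * months
    return (total_benefit - total_cost) / total_cost

# Hypothetical figures for an AI document-extraction tool.
upfront = 250_000  # development, data preparation, integration
benefit = 30_000   # monthly savings from less manual data extraction
running = 8_000    # monthly cloud, monitoring, and retraining costs

print("Payback month:", payback_month(upfront, benefit, running))            # 12
print(f"ROI over 24 months: {simple_roi(upfront, benefit, running, 24):.0%}")  # 63%
```

Note how the running costs, which the article warns are often overlooked, stretch the payback period: without them, the same project would pay back in month 9.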

If your assessment shows that your organization lacks the capabilities to launch an AI initiative, you can turn to AI development services. Experienced providers will help you with project planning, management, and post-deployment maintenance. Companies like SoftTeco have a skilled talent pool and relevant infrastructure and can build custom solutions without wasting resources on wrong decisions.

Conclusion

There are many reasons why AI projects fail. The key areas where businesses struggle include a scarcity of AI-ready data, a lack of governance, outdated infrastructure, and misalignment between strategy and project goals. Yet organizations remain enthusiastic and keep investing in artificial intelligence software development.

An MIT report shows that high adoption rates still outpace actual transformational impact, highlighting a significant “GenAI divide.” The expected benefits are often unquantified, overestimated, and hard to achieve. At the same time, adoption of tools like ChatGPT and of custom AI solutions is at an all-time high.

Fortunately, there are plenty of tactics to mitigate project risks. Some require gradual implementation, such as upgrading current infrastructure, introducing AI-ready data governance, and updating models. Others improve the planning stage: feasibility checks, ROI assessment, and alignment of project objectives with business goals.

Overall, businesses should not fall for the AI hype; instead, they should plan in advance and clearly define the value they want to achieve from the future system or tool, while also strengthening their technical maturity.
