In recent years, we’ve seen technology advance rapidly and spread across various domains, including banking. At the same time, this advancement means that fraudulent activities have become more sophisticated and now require much more powerful tools to confront them. The AFP 2025 Survey reports that 79% of companies experienced actual or attempted payment fraud in 2024. And according to a report by GASA, financial losses to international scammers amounted to about $1 trillion over 12 months as of 2024.

With that in mind, both established banks and aspiring financial startups need to invest in powerful next-generation fraud detection tools, such as those based on artificial intelligence. Below we’ll discuss the main idea behind AI fraud detection and real-life use cases of this technology in banking.

AI vs traditional methods

To understand the role of AI-based fraud detection in banking and the way it operates, it is essential to first look back at traditional fraud detection processes and the reasons behind their flaws.

Traditional fraud detection

Before artificial intelligence entered the market, banks relied heavily on rule-based systems. As the name suggests, these systems operate on hard-coded rules that most often follow “if-else” logic. Simply put, if a transaction meets any trigger defined in a rule, the system flags the transaction as fraudulent.
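
To make the “if-else” idea concrete, here is a minimal sketch of such a rule set in Python. The `Transaction` fields, the rule names, and both thresholds are hypothetical and chosen only for illustration; a real bank would define its own.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Transaction:
    amount: float            # transaction amount in USD
    country: str             # country the transaction comes from
    home_country: str        # customer's usual country
    attempts_last_hour: int  # payment attempts made in the past hour


# Hypothetical thresholds; not taken from any real system.
MAX_AMOUNT = 10_000
MAX_ATTEMPTS_PER_HOUR = 5


def triggered_rules(tx: Transaction) -> List[str]:
    """Return the names of all hard-coded rules the transaction triggers."""
    reasons = []
    if tx.amount > MAX_AMOUNT:
        reasons.append("amount_over_limit")
    if tx.country != tx.home_country:
        reasons.append("foreign_country")
    if tx.attempts_last_hour > MAX_ATTEMPTS_PER_HOUR:
        reasons.append("too_many_attempts")
    return reasons


def is_flagged(tx: Transaction) -> bool:
    """A transaction is flagged as soon as it meets any rule."""
    return bool(triggered_rules(tx))
```

Because every flag comes with the names of the rules that fired, it is always clear why an alert was raised. The flip side is that each new fraud pattern needs a new hand-written `if` branch.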

These rules can be based on the industry’s best practices and/or on the company’s historic data of fraudulent transactions. And while the whole system sounds quite secure and robust, there are several big issues with it.

First, rules are defined by people, meaning there is a chance of human error. In addition, poorly defined rules mean poor security, since the system follows these rules strictly and allows no deviations.

Second, fraudulent activities evolve constantly, so the rule-based system has to keep up. For that, companies have to keep updating their rules so that the system adapts to the changing landscape of fraudulent activities. Needless to say, this can be cumbersome and quite time-consuming.

The pros of rule-based systems:

- 100% transparency and explainability of the system since you can always know what events trigger alerts;

- No cold start: the system is fully operational right from the start;

- Easier entry: for developing such a system, you’ll need just a team of backend developers.

The cons of rule-based systems:

- The constant need for developing new rules in response to new fraudulent activities;

- Growth of the number of rules and hence, the amount of needed maintenance;

- Limitations of the rules due to their manual definition and implementation.

AI-based fraud detection

Artificial intelligence, on the other hand, is able to automatically “learn” and adapt to new threats. Data scientists can also train the existing ML model on the new data without the need to redesign it. This is obviously a big advantage. Another great thing about the use of AI is its flexibility and automatic scaling: since there are no hard-coded rules, the model can actually “figure out” the reasons behind a certain event being fraudulent. Also, there are now many companies that provide excellent AI development services, meaning you won’t have to worry about assembling a team of data scientists.

The pros of AI-based systems:

- Automatic recognition of fraudulent patterns and adaptation to them;

- An ability to retrain existing models on new data without the need to reverse engineer the methods of fraudsters;

- Automation of the majority of processes involved in fraud detection and prevention;

- Efficient scalability and seamless work with massive data volumes.

The cons of AI-based systems:

- Requires a large amount of historical data to train on before it can start working;

- The “black box” issue, meaning there might be a lack of transparency and explainability;

- Requires companies to hire data scientists to design and set up an ML model.

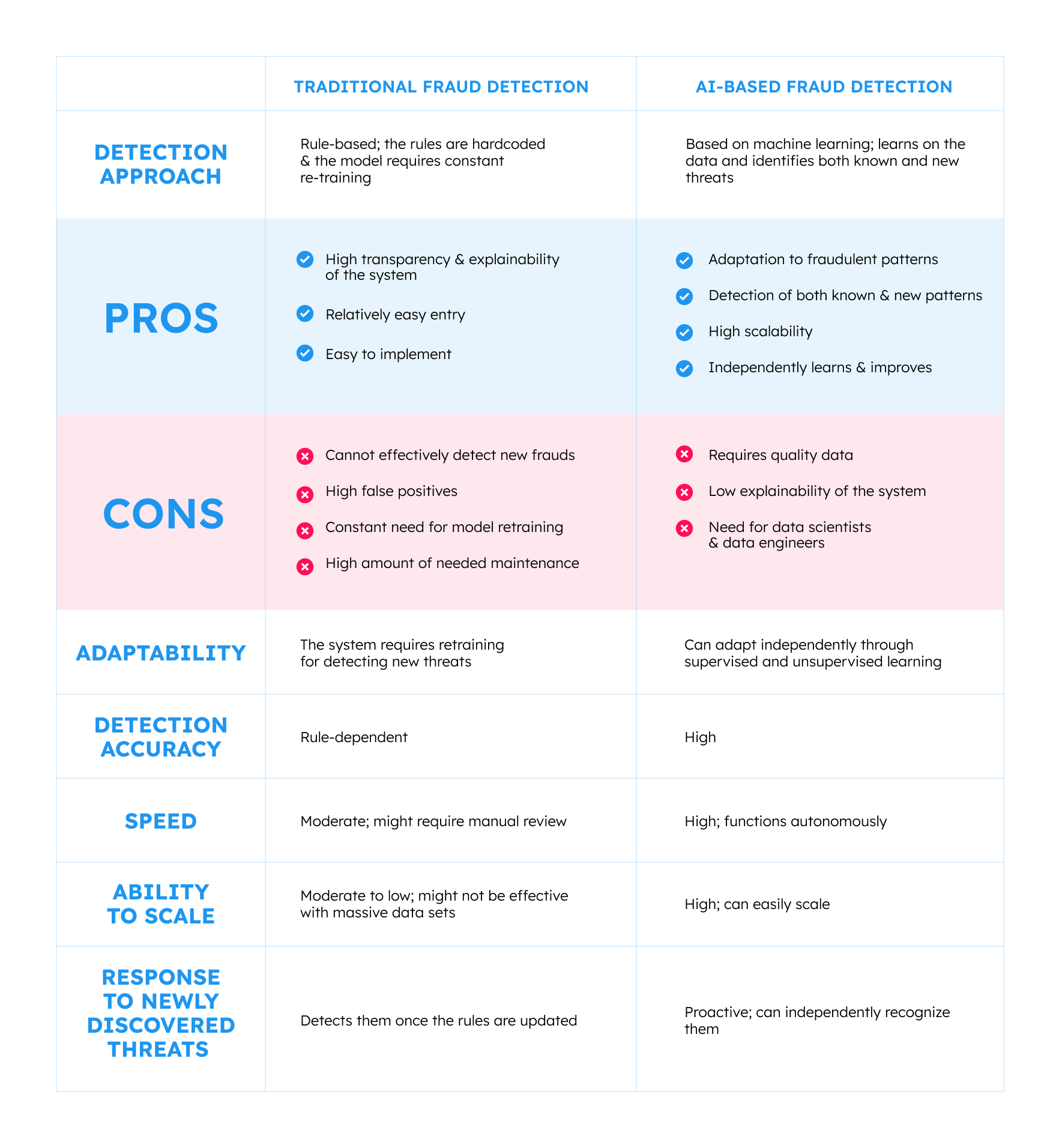

Traditional fraud detection vs AI-based fraud detection: a comparison table

Supervised vs unsupervised machine learning

Financial institutions deploy supervised and unsupervised machine learning to teach ML models how to recognize potential fraudulent activity and flag it.

Supervised machine learning

The supervised machine learning technique means that ML models are trained on pre-labelled data sets. In this way, the model is “told” which data is legitimate and which is fraudulent. The data sets usually contain both legitimate and fraudulent transactions, and the goal of the ML model is to learn to recognize suspicious transactions and detect them independently in the future. Examples of fraud indicators include flagged IP addresses or transfers to a known fraudulent address. As a result of the training, an ML model can identify anomalies that match the known fraudulent patterns.
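
As a rough illustration of learning from labelled data, the sketch below trains a nearest-centroid classifier, one of the simplest supervised methods, using only the Python standard library. The six labelled transactions and the two features (amount and hour of day) are made up for the example; production systems use far richer features and models.

```python
from math import dist
from statistics import mean

# Hypothetical labelled history: (amount in USD, hour of day) -> label.
LABELLED = [
    ((25.0, 14), "legitimate"),
    ((60.0, 10), "legitimate"),
    ((40.0, 18), "legitimate"),
    ((950.0, 3), "fraudulent"),
    ((1200.0, 2), "fraudulent"),
    ((870.0, 4), "fraudulent"),
]


def train(samples):
    """Compute one centroid (mean feature vector) per label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Assign the label whose centroid is closest to the new transaction."""
    # Features are left unscaled here, so the amount dominates the distance.
    return min(centroids, key=lambda label: dist(centroids[label], features))


centroids = train(LABELLED)
print(predict(centroids, (1000.0, 3)))   # close to the fraudulent examples
print(predict(centroids, (30.0, 12)))    # close to the legitimate examples
```

The model never sees an explicit rule. It only sees examples with labels and generalizes from them, which is also why it can only recognize patterns that resemble fraud it has already seen.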

Unsupervised machine learning

Since fraudulent schemes are constantly evolving, supervised machine learning alone is not enough to recognize new threats. This is where unsupervised learning steps in. With unsupervised learning, the model analyzes raw data that is not pre-labelled. As a result, the model learns how to detect non-obvious or subtle fraudulent patterns and is therefore more likely to detect previously unrecognized threats.
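
One simple way to picture detection without labels is outlier scoring. The sketch below scores each amount by how far it lies from the median, measured in units of the median absolute deviation (MAD). This is only one of many unsupervised techniques, and the threshold of 5 is an assumption made for the example.

```python
from statistics import median


def anomaly_scores(values):
    """Score each value by its distance from the median, in units of MAD."""
    centre = median(values)
    mad = median([abs(v - centre) for v in values])
    if mad == 0:
        # Degenerate case: most values are identical, so this sketch
        # reports no anomalies rather than dividing by zero.
        return [0.0 for _ in values]
    return [abs(v - centre) / mad for v in values]


def find_outliers(values, threshold=5.0):
    """Return the values whose anomaly score exceeds the threshold."""
    scores = anomaly_scores(values)
    return [v for v, s in zip(values, scores) if s > threshold]


# Unlabelled amounts: nobody has marked any of them as fraudulent.
amounts = [20, 25, 22, 30, 27, 24, 5000, 21, 26]
print(find_outliers(amounts))
```

No transaction here was labelled in advance; the 5000 payment stands out purely because it differs from the rest of the data.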

Summing up, supervised learning is highly effective in detecting known threats, while unsupervised learning is suitable for newly discovered or emerging ones. It is recommended to combine these two methods for higher efficiency. But before you build and start an ML model, you’ll have to collect and prepare the data that the model will work with. Remember: more data generally means more accurate results, since ML works best with large data sets. Also, it is recommended to hire a knowledgeable team of ML experts who have rich experience in providing financial software development services. In this way, you will ensure that the designed ML model is 100% compatible with your system and is reliable and secure.

Once the data is prepared and fed to the model, it will start the analysis. Note that the work doesn’t stop here. You’ll have to continuously feed new data to the model to ensure that the system keeps up with evolving threats and can recognize them successfully. One more strong suit of machine learning is real-time analytics, which can be immensely useful, especially when the system alerts you immediately upon detecting a threat.

AI fraud detection methods

Now that we’ve discussed how exactly artificial intelligence processes the data, let’s look at the most common fraud detection methods that are powered by this technology:

AI-based behavioral analytics

One of the cornerstones of AI-powered fraud detection is the ability of ML models to analyze user behavior and transaction patterns and identify any suspicious activity or deviations from usual spending habits. Examples include transactions from unusual locations or atypical transaction amounts. In such cases, machine learning algorithms flag the transaction as fraudulent and immediately report and block it, thus preventing potential financial losses.

To effectively train your ML model and obtain the results you need, you’ll first have to collect and prepare your data set. You’ll also need to define the data points that are to be monitored for anomalies. They normally include the IP address, devices in use, configurations of the systems and browsers used, etc. The more information you “feed” to the ML model and monitor, the more accurate the results will be.
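
The monitoring described above can be sketched as a per-customer profile that is compared against each new transaction. This is a simplified statistical stand-in for a trained model, not a real ML pipeline. The `UserProfile` class, the chosen data points (device, country, amount), and the three-standard-deviation cut-off are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev
from typing import List, Set


@dataclass
class UserProfile:
    """What we have observed about one customer so far."""
    known_devices: Set[str] = field(default_factory=set)
    known_countries: Set[str] = field(default_factory=set)
    past_amounts: List[float] = field(default_factory=list)

    def update(self, device: str, country: str, amount: float) -> None:
        self.known_devices.add(device)
        self.known_countries.add(country)
        self.past_amounts.append(amount)


def deviations(profile, device, country, amount, max_sigma=3.0):
    """List the ways a new transaction departs from the customer's habits."""
    found = []
    if device not in profile.known_devices:
        found.append("new_device")
    if country not in profile.known_countries:
        found.append("new_country")
    if len(profile.past_amounts) >= 2:
        avg = mean(profile.past_amounts)
        spread = pstdev(profile.past_amounts)
        # Flag amounts more than max_sigma standard deviations from the mean.
        if spread > 0 and abs(amount - avg) / spread > max_sigma:
            found.append("unusual_amount")
    return found


profile = UserProfile()
for past in (20.0, 30.0, 25.0, 35.0):
    profile.update("phone-1", "US", past)

print(deviations(profile, "laptop-9", "BR", 900.0))
print(deviations(profile, "phone-1", "US", 28.0))
```

A transaction from an unknown device, in a new country, and for an amount far above the customer’s usual spending accumulates three deviations, while an ordinary purchase produces none.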

Predictive analytics

One more important aspect of using artificial intelligence in fraud detection is predictive analytics. Predictive analytics is the analysis of big data with machine learning models, with the aim of accurately predicting possible future events.

In banking, predictive analytics can help with the following:

- Conducting a SWOT analysis to identify potential weaknesses and ways to resolve them;

- Identification of fraudulent activities that will most likely occur based on your historical data;

- Anticipation of customer behavior;

- Assessment of credit risks and a customer’s creditworthiness.

Predictive analytics is mostly used in lending, though it can also help highlight other risk areas that might otherwise be overlooked.

Simulation models

One more powerful technique included in the process of fraud detection using AI in banking is the creation of a virtual environment that mimics the real one. By using this virtual environment, banks can safely test their fraud prevention strategies and simulate cyber attacks to see how well their environment is protected. The best part about such simulation models is that banks do not risk their financial assets but at the same time, can quite accurately predict risks and evaluate the effectiveness of their fraud detection tools.
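
A very small version of this idea is to generate synthetic transactions with injected fraud and measure how a detection strategy performs on them. In the sketch below, the amount ranges, the fraud rate, and the simple `amount > threshold` strategy are all assumptions. The point is only to show how a strategy can be evaluated without touching real money or real customer data.

```python
import random


def simulate_transactions(n, fraud_rate, seed=0):
    """Generate synthetic (amount, is_fraud) pairs."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    transactions = []
    for _ in range(n):
        is_fraud = rng.random() < fraud_rate
        # Assumed ranges, chosen only so that the two classes overlap.
        amount = rng.uniform(800, 5000) if is_fraud else rng.uniform(5, 1500)
        transactions.append((amount, is_fraud))
    return transactions


def evaluate(transactions, threshold):
    """Return (recall, false_positive_rate) for the rule amount > threshold."""
    tp = sum(1 for amount, fraud in transactions if fraud and amount > threshold)
    fp = sum(1 for amount, fraud in transactions if not fraud and amount > threshold)
    frauds = sum(1 for _, fraud in transactions if fraud)
    legit = len(transactions) - frauds
    recall = tp / frauds if frauds else 0.0
    fpr = fp / legit if legit else 0.0
    return recall, fpr


data = simulate_transactions(10_000, fraud_rate=0.05, seed=42)
for threshold in (1000, 2000):
    recall, fpr = evaluate(data, threshold)
    print(f"threshold={threshold}: recall={recall:.2f}, false positives={fpr:.2f}")
```

Raising the threshold removes false alarms on legitimate payments but lets more simulated fraud through. A trade-off like this is far safer to explore in a simulated environment than in production.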

Expert Opinion

The latest developments in AI have made fraud detection increasingly challenging. This is largely because generative AI models significantly enhance the ability to create fraudulent content, while offering limited assistance in combating fraud. The nature of the latest large language models (LLMs) and other generative models plays a role in this: they rely on associative rules, which are useful for creative tasks (and fraud can be seen as a creative task to some extent), but are notorious for generating hallucinations. These hallucinations pose a serious risk when working with sensitive banking data, making the application of such models highly limited due to the potential dangers.

Most common cases of fraud in banking

Artificial intelligence is, without a doubt, a highly valuable asset for any banking institution. But it’s not enough to just design and implement AI in banking fraud detection. To make the most of this technology, banks first need to recognize and understand the variety of possible threats that they need to focus on.

Generative AI and deepfakes

One of the biggest concerns in the banking industry today is the growing threat of generative AI (GenAI for short) and the creation of deepfakes. A brief recap of these terms before we move on:

- Generative AI is a subset of artificial intelligence that is used to generate content in various forms, including text, images or audio.

- Deepfake refers to an artificial image or video, generated (or edited) by using AI tools.

So, what’s the big deal and how do GenAI and deepfakes pose a threat to banks?

You might have heard of a case when, in January 2024, an employee of a Hong Kong-based firm transferred $25 million to fraudsters, fully believing that they were on a call with their boss. Needless to say, the boss and other people on the call were generated by AI, which is both terrifying and impressive, to be honest. And now that GenAI is rapidly evolving, banks and their customers are exposed to newly emerging frauds like deepfake videos, audio calls, or documents. According to Deloitte, fraud losses from GenAI might reach US$40 billion in the USA by 2027, with GenAI email fraud losses alone reaching US$11.5 billion by the same date.

Other examples of banking fraud

In addition to Generative AI and deepfakes, there are other threats that banks must consider when planning their cybersecurity strategy. These threats include:

- Identity theft: fraudsters steal your personal information (basically, your “identity”) to perform unlawful activities (e.g., applying for a loan under your name).

- Phishing: the practice of sending fraudulent emails (or other forms of messages) that persuade the recipient to take a certain action, most often leading them to reveal personal information.

- Account takeover: similar to identity theft where a malicious agent gets access to your credentials and basically takes over your personal account.

- Payment fraud: performing unauthorized transactions with someone’s stolen payment information.

- Money laundering: a process of illegally concealing the origin of money from unlawful activities.

As you can see, types of fraud in banking vary but most of them are centered around accessing one’s personal information. Hence, banks need to build their security strategy accordingly and ensure that both employees and customers understand the risks and know how to respond to threats correctly.

How companies improve fraud detection using AI: real-life case studies

Below we list the most prominent use cases of companies already deploying AI and machine learning to reduce and mitigate fraud and the negative impact that it causes:

American Express

Considering the growing volume of credit card fraud, which costs US customers roughly $11 billion per year, American Express decided to use machine learning to improve its fraud detection processes. The company deployed deep learning models to develop a powerful AI-based fraud detection tool. The tool combines recurrent neural networks (RNNs) with long short-term memory (LSTM) networks, a type of RNN suited to sequential data, for immediate detection of anomalies in massive volumes of transactional data. As a result, American Express was able to improve fraud detection accuracy by 6% in certain segments and is now using an improved and more powerful ML model called Gen X.

PayPal

Another example of a global financial company using AI for fraud detection is PayPal. Back in 2019, the company partnered with a reliable software provider to design a fraud detection solution that would function 24/7 across the globe and would be able to detect potential fraud in real time. As a result, PayPal was able not only to cover massive volumes of customer transactions but also to reduce the server capacity it needed, eventually improving real-time fraud detection by 10%. Today, PayPal offers a variety of risk and fraud management solutions to keep its clients’ data and transactions as safe as possible.

The US Department of the Treasury

In Fiscal Year 2023, the US Department of the Treasury managed to recover $375M by implementing AI in its fraud detection process. The decision was driven by the fact that in 2021, due to the pandemic, check fraud had increased drastically, by 385%. To address the issue, the Treasury turned to artificial intelligence and has been enhancing its fraud detection policies since then.

Main challenges and considerations of using AI for banking

While artificial intelligence fraud detection proves to be a highly efficient tool, it also comes with certain limitations and challenges. The most prominent are:

Data privacy and regulatory concerns

Just like any other solution, financial fraud detection software based on AI needs to comply with relevant regulations, GDPR being the best known one. Needless to say, the loss or compromise of personal data can lead to major consequences, including both financial losses and a significant hit to the company’s reputation. Hence, the implemented AI solution needs to comply with applicable regulations and preferably be transparent and explainable.

Potential bias

Another concern related to AI in banking and finance is the high possibility of biased decisions, since ML models are designed by people and learn from historical data that may itself be skewed. The possibility of bias means that certain customer segments may be treated differently or unfairly, which, in turn, will impact the company’s reputation and level of trust. A good way to minimize bias is to use diverse and representative sets of data that will help ensure fairness of the fraud detection process.
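
One practical way to check for unfair treatment is to compare how often legitimate customers in each segment are wrongly flagged. The sketch below computes the false positive rate per segment. The segment names and records are invented, and this is only one of several possible fairness checks.

```python
from collections import defaultdict


def false_positive_rate_by_segment(records):
    """records: iterable of (segment, was_flagged, was_actually_fraud)."""
    wrongly_flagged = defaultdict(int)
    legitimate = defaultdict(int)
    for segment, was_flagged, was_fraud in records:
        if not was_fraud:  # only legitimate transactions can be false positives
            legitimate[segment] += 1
            if was_flagged:
                wrongly_flagged[segment] += 1
    return {seg: wrongly_flagged[seg] / total for seg, total in legitimate.items()}


# Hypothetical outcomes for two customer segments, "A" and "B".
records = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", False, False),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", True, True),  # correctly flagged fraud, so not a false positive
]
print(false_positive_rate_by_segment(records))
```

In this made-up data, legitimate customers in segment B are wrongly flagged twice as often as those in segment A. A gap like that is worth investigating before the model goes live.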

Future trends in AI fraud prevention

Let’s wrap up with a few trends that are likely to shape the future of AI in banking.

AI-driven customer awareness

Banking fraud can be corporate (like an external hacker attacking the banking system) or non-corporate. The latter includes stolen PINs, identity theft, phishing and other frauds related directly to customers and their personal information. To minimize these risks, customers should understand their nature and the reasons why these threats are most likely to occur. Artificial intelligence can be used both to alert customers immediately about any suspicious activity and to educate them on basic cybersecurity. AI-driven client awareness is an important aspect of cybersecurity that banks should not overlook.

Collaborative fraud prevention

To minimize and prevent threats, all bank employees need to work together, and the adopted cybersecurity strategy should be implemented on all levels. Even the smallest mistake made by an employee can lead to tremendous consequences in terms of security, so everyone who has access to personal information and the banking system should work collaboratively on fraud prevention. One of the ways to raise security awareness is to provide employee training on a regular basis.

Focus on explainability

One more trend related to the use of AI in fraud detection is explainability and transparency. Since some ML models have the “black box” issue (meaning, the reasoning behind delivered outcomes cannot be explained properly), data scientists will work on making AI more explainable and thus, more trustworthy.

In conclusion

AI fraud detection is a powerful tool that banks can use effectively. However, implementing this technology alone is not enough. To successfully battle fraud, banks need to review and redesign their cybersecurity strategy, adapting it to the changing landscape of fraud attacks and becoming proactive instead of reactive.
