Table of Contents

Neural networks have drastically transformed how computers process information by imitating the way humans perceive information and learn. However, as the field of artificial intelligence continues to advance, the need for more specialized types of neural networks grows.

To better understand what the future brings us in terms of deep learning, let’s explore the depths of the diversity of neural networks, their main advantages and challenges.

What are artificial neural networks?

Artificial neural networks (ANNs) are computational models designed to emulate the way our brain functions. These networks form a subset of machine learning and serve as the foundation of deep learning algorithms.

At its core, an ANN consists of interconnected nodes (artificial neurons) that are responsible for information processing. These nodes receive input signals, perform a computation, and deliver an output signal. The outputs are then passed on to other neurons, thus creating a network of interconnected layers.

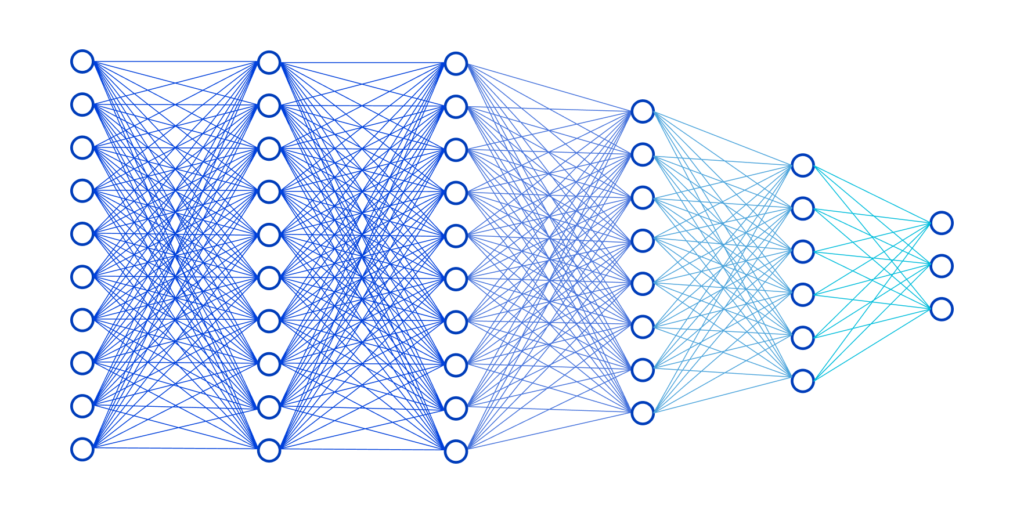

There are typically several layers in an artificial neural network:

- Input layer – receives external information and processes, analyzes, or classifies it before passing it to the next layer.

- Hidden layers – perform complex computations and transformations on the input data, extracting valuable features and patterns.

- Output layer – produces the desired outcome or prediction based on the processed information.

As for the node, it includes:

- Input data: signals received by the node, which could be features from the external environment or outputs from previous nodes in the network.

- Weights: the numbers that represent the strength of the connections between the node’s inputs and output.

- Threshold: a predefined value that determines whether the node’s output is activated. If the sum of the weighted inputs exceeds the threshold, the node activates and produces an output; otherwise, it remains inactive.

One of the key strengths of artificial neural networks is their ability to learn from data. During training, the network adjusts the strength of the connections between neurons based on the input data and the desired output. Through parallel processing and adaptive learning the network is able to recognize patterns, interpret complex data, make accurate predictions, and solve other intricate tasks. This iterative learning process allows the network to adapt and improve its performance over time.

Types of artificial neural network

There are various types of neural networks in machine learning, each sharing the common goal of mimicking the human brain’s function to address complex problems. However, they differ in complexity, use cases, structure, modeling of artificial neurons, connections between nodes, data flow, and node density. Let’s take a closer look at some of the neural network types.

Feedforward Neural Networks (FNN)

Feedforward neural networks (FNN) represent a fundamental and simplest type of neural network. They consist of interconnected nodes, also known as neurons, organized in layers. Each neuron in a layer is connected to every neuron in the subsequent layer, and these connections are associated with weights that are adjusted during the training process.

The network operates in a feedforward manner, meaning the input signals are propagated through the network layer by layer, without any feedback loops. Each neuron applies a weighted sum of its inputs, followed by an activation function that introduces non-linearity, allowing the network to learn complex patterns and relationships within the data.

Training an FNN involves presenting it with a set of input data along with the corresponding desired outputs and adjusting the weights to minimize the difference between the predicted and actual outputs. This process, known as backpropagation, uses optimization algorithms such as gradient descent to update the weights iteratively, gradually improving the network’s ability to make accurate predictions.

One of the primary advantages of FNNs is their ability to learn complex, non-linear relationships within data, making them suitable for a wide range of tasks. However, FNNs can be computationally intensive, requiring substantial computational resources for training and inference. Additionally, they may face challenges in handling sequential data and capturing long-term dependencies, prompting the development of more advanced architectures such as recurrent neural networks (RNNs) and transformers.

Convolutional Neural Networks (CNN)

CNNs are a class of deep neural networks that are specifically designed to process and analyze visual data. The key components of a CNN include convolutional layers, pooling layers, and fully connected layers.

- Convolutional Layers: These layers apply a set of learnable filters to the input data. Each filter performs a convolution operation, capturing spatial hierarchies and detecting features such as edges, textures, and patterns within the input images.

- Pooling Layers: Pooling layers downsample the feature maps generated by the convolutional layers, reducing the dimensionality of the data and preserving the most relevant information. Common pooling operations include max pooling and average pooling.

- Fully Connected Layers: These layers integrate the high-level features extracted by the previous layers to make final predictions or classifications.

CNNs have found widespread applications across various domains:

- Image Recognition: CNNs excel at tasks such as object detection, facial recognition, and image classification. They have been pivotal in enabling technology for applications like photo tagging, content-based image retrieval, and biometric authentication systems.

- Medical Imaging: In the healthcare sector, CNNs have been instrumental in diagnosing diseases from medical images such as X-rays, MRIs, and CT scans. They aid in identifying anomalies, tumors, and other critical features, assisting medical professionals in making accurate and timely diagnosis.

- Autonomous Vehicles: CNNs play a crucial role in the development of self-driving cars by enabling them to perceive and interpret the surrounding environment. They help to recognize pedestrians, traffic signs, and obstacles on the road, contributing to the safety and efficiency of autonomous driving systems.

Recurrent Neural Networks (RNN)

Unlike traditional feedforward neural networks, RNNs possess connections that loop back on themselves, allowing them to retain a memory of previous inputs. This characteristic makes RNNs inherently capable of capturing temporal dependencies and patterns within sequential data, making them ideal for tasks that involve analyzing and generating sequences.

The versatility of RNNs renders them invaluable across various domains. In natural language processing, RNNs are leveraged for tasks such as language modeling, machine translation, and sentiment analysis. By virtue of their ability to understand and generate sequences, RNNs can effectively capture the contextual nuances and dependencies present in human language.

Furthermore, RNNs find extensive utility in speech recognition systems, wherein they play a pivotal role in processing and interpreting spoken language. They excel at modeling the temporal dynamics of speech signals, thereby enabling accurate transcription and understanding of spoken words and phrases.

In addition to language-related tasks, RNNs are widely employed in time series prediction and forecasting. Whether it pertains to financial market trends, weather patterns, or stock price movements, RNNs can analyze historical data to make informed predictions about future outcomes, thereby facilitating decision-making and strategic planning.

Long Short-Term Memory (LSTM) Networks

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) that has gained significant attention in the field of deep learning due to their ability to effectively model sequences and time series data. LSTMs are designed to address the vanishing and exploding gradient problems that plague traditional RNNs, making them particularly well-suited for tasks such as speech recognition, language modeling, and financial forecasting.

LSTM networks consist of memory cells that are capable of storing information over long periods of time. Unlike traditional RNNs, LSTMs have the ability to selectively retain or discard information through the use of gates, which include the input gate, forget gate, and output gate. These gates regulate the flow of information into and out of the memory cell, allowing LSTMs to capture long-range dependencies in data.

LSTMs have found widespread application in speech recognition and language modeling. In speech recognition, LSTMs can model complex dependencies in audio sequences, improving accuracy in transcribing spoken language. In language modeling, LSTMs are employed to predict the likelihood of a word or sequence of words given the context, aiding in tasks such as text generation, machine translation, and sentiment analysis.

Gated Recurrent Units (GRU)

Gated Recurrent Units (GRU) is also a type of RNN and represents a simplified version of long short-term memory networks. Similar to LSTMs, GRUs are designed to overcome the vanishing gradient problem in traditional RNNs, but they achieve this with a more streamlined architecture.

GRUs use gating mechanisms to selectively update and reset their internal states. This allows them to better capture long-term dependencies in the data and avoid the problem of information loss over time. This gating mechanism involves fewer parameters compared to LSTMs, making GRUs computationally more efficient.

Autoencoders

Autoencoders are designed for unsupervised learning and dimensionality reduction. The primary purpose of autoencoders is to learn efficient representations or encodings of input data, compressing it into a lower-dimensional space while retaining essential features. This process involves an encoder to compress the data and a decoder to reconstruct it back to the original form:

- Encoder: takes the input data and transforms it into a compressed, lower-dimensional representation. It consists of layers of neurons that progressively capture relevant features of the input.

- Decoder: takes the compressed representation produced by the encoder and reconstructs the original data. Like the encoder, it comprises layers that map the compressed representation back to the input space, aiming to reproduce the initial data as accurately as possible.

Autoencoders are successfully applied in various domains, including image recognition, speech processing, and recommendation systems.Their adaptability and efficiency make them invaluable assets in extracting meaningful insights from complex datasets.

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a class of artificial intelligence algorithms that operate within an adversarial training framework. This framework involves two neural networks, a generator, and a discriminator, engaged in a competitive and cooperative learning process. The generator aims to create realistic data, while the discriminator endeavors to distinguish between real and generated data. Through this adversarial process, both networks improve their performance iteratively.

GANs are widely employed in image generation tasks, creating realistic and high-quality images. They are used in areas such as generating artwork, faces, and even entirely synthetic scenes. The generated images often exhibit a remarkable level of realism and diversity.

GANs are also applied to style transfer, where the goal is to apply the artistic style of one image to another. This results in the creation of visually compelling and stylized images, merging the content of one image with the artistic characteristics of another.

Radial Basis Function Neural Networks (RBFNN)

The architecture of RBFNNs consists of three layers: the input layer, the hidden layer, and the output layer. The input layer receives the input data, which is then transmitted to the hidden layer. The hidden layer contains a set of radial basis functions, which are responsible for transforming the input data into a higher-dimensional space. These functions are centered at specific points in the input space and have a radial symmetry.

The transformed data is then passed to the output layer, where the final computation is performed to produce the network’s output. The output layer typically uses a linear combination of the hidden layer’s outputs, weighted by adjustable parameters, to generate the final predictions or classifications.

One of the key advantages of RBFNNs is their ability to efficiently approximate complex functions with a relatively small number of parameters. This makes them particularly effective for tasks involving non-linear data, where traditional neural networks may struggle to converge to an optimal solution.

RBFNNs are also known for their ability to function approximation and time series prediction.

- Function approximation. RBFNNs estimate unknown functions by using observed data points from the domain. Their radial basis functions help model complex relationships, making them useful in various applications like mathematical modeling and signal processing.

- Time series prediction. RBFNNs effectively analyze historical data with temporal sequences for predicting future trends. It is especially useful in the finance sphere and weather forecasting, providing insights for decision-making based on past observations.

Modular neural networks

At its core, a modular neural network is characterized by its modular architecture, which involves breaking down the network into interconnected modules, each responsible for specific tasks or sub-problems. These modules can be designed to function autonomously or collaborate with other modules to collectively solve more complex problems. This modular approach mirrors the concept of modularity in biological systems, where distinct components work in tandem to achieve a unified goal.

The modularity of neural networks offers several advantages, such as improved reusability of modules across different tasks, enhanced interpretability of the network’s behavior, and the potential for efficient parallel processing. Furthermore, modular neural networks can exhibit increased robustness, as the failure of a single module may not lead to catastrophic system failure, unlike traditional monolithic networks

Choosing the right ANN type for the task

As mentioned earlier, ANNs are a powerful tool in machine learning and artificial intelligence. They serve various purposes, including pattern recognition, classification, and prediction. However, selecting the appropriate type of ANN for a specific task is crucial for achieving optimal results. Several factors influence this selection process, and understanding these factors will help you to choose the right option.

- Nature of the data. The type of data you handle is important. The appearance of the data, its quantity, and whether it arrives sequentially or as images all play a role in selecting the most appropriate neural network. For instance, if you’re working with images, CNNs are more fitting, while RNNs are preferable for sequential data.

- Interpretability of results. In some applications, the interpretability of the results is crucial. For instance, in medical diagnosis or financial forecasting, it is essential to understand how the model arrives at its predictions. In such cases, it’s better to use simpler ANN architectures over complex deep learning models.

- Task complexity. The complexity of the task at hand influences the choice of neural network. Simple tasks, like basic pattern recognition, may be efficiently handled by FNNs. For more complex tasks requiring memory or understanding of long-term dependencies, recurrent or attention-based models like LSTMs or Transformers might be more suitable.

- Training data size. The size of the training dataset also plays a role in selecting the appropriate neural network. Deep learning models, like deep neural networks, usually demand substantial data for effective training. In situations with limited data, transfer learning or simpler architectures will work better.

- Computational resources. The availability of computational resources also dictates the choice of ANN type. Training deep neural networks often requires substantial computational power and memory. Therefore, in resource-constrained environments, simpler architectures or techniques might be more feasible.

Challenges of ANN models

Despite their widespread use and effectiveness, artificial neural networks also present several challenges:

Overfitting and generalization

One of the primary challenges of ANN models is overfitting, where the model performs well on the training data but fails to generalize to unseen data. Overfitting occurs when the model captures noise and irrelevant patterns from the training data, leading to poor performance on new data.

Data quality and quantity

The performance of all types of neural networks in deep learning heavily depends on the quality and quantity of the training data. Insufficient or noisy data can lead to biased or inaccurate model predictions. Additionally, the availability of labeled data for supervised learning tasks can be a significant hurdle, especially in domains where data collection is expensive or time-consuming. Addressing these challenges may involve data augmentation, transfer learning, or semi-supervised learning approaches to make the most of limited training data.

Computational complexity and training time

Training large-scale ANN models with complex architectures often requires substantial computational resources and time. This challenge is particularly pronounced in deep learning, where training deep neural networks with millions of parameters can be computationally intensive. Researchers are constantly exploring ways to optimize model architectures and develop hardware accelerators to reduce training time and make ANN models more accessible and practical for real-world applications.

Interpretability and explainability

Despite their remarkable performance, artificial neural networks are often considered “black box” models, making it challenging to interpret their decisions and provide explanations for their predictions. In applications where transparency and interpretability are important, the lack of explainability can be a significant barrier to the adoption of ANN models.

Ethical and legal considerations

As ANNs continue to be deployed in various domains, ethical and legal considerations surrounding issues such as privacy and accountability have become increasingly important. Biased predictions, unfair treatment of certain groups, and unauthorized use of sensitive data are potential risks associated with the deployment of ANN models.

Expert Opinion

Currently, transformer-based neural networks are predominant in the AI field, particularly in natural language processing. Their exceptional handling of sequential data and ability to comprehend extended context render them highly effective for complex tasks like content generation and language translation.

Concurrently, convolutional neural networks remain essential for image and video analysis due to their proficiency in detecting patterns and visual features. Anticipated advancements in AI, especially in unsupervised and self-supervised learning, are poised to significantly enhance generative content capabilities. These developments are expected to broaden AI’s adaptability in various domains, such as advertising, social media marketing, and call center operations.

Conclusion

To what extent can we replicate a human brain? Replicating it entirely still remains beyond the current capabilities of technology and scientific understanding. However, the diversity of types of ANN, inspired by the structure and function of the human brain, offers a diverse toolkit for solving different challenges in artificial intelligence and machine learning. Even though these networks still have their limitations, as technology advances, this flexibility continues to drive new possibilities for intelligent systems.

Comments